Quality at Trellis: Our Vision for Testing and Automation

At Trellis, we believe quality and speed can go hand in hand. Over the past year, we've doubled down on building a robust testing and automation culture to ensure our fundraising platform remains reliable, scalable, and high‑quality as we grow. A few wake-up calls underscored the need for this initiative: a critical bug slipped through during an auction close, and bugs and regressions rose 166% in April 2025 compared to March, nearly reaching last fall's peak levels (fall is typically our busiest time of the year). As our customer base expands (and becomes less tolerant of issues as we move upmarket), it was clear we had to elevate our testing strategy. We set out a "north star" vision for testing and automation that would let us move fast without sacrificing stability or performance. In this article, we'll share how and why we approach testing the way we do at Trellis, what we've implemented, what's on our roadmap, and the reasoning behind our choices.

Buckle up, this ended up longer than I originally expected...

Types of Testing at Trellis

At Trellis, we employ testing strategies at different layers and points in the software development and release lifecycle (as should you!), each with a specific scope and purpose. These layers range from narrow unit tests to broad end-to-end scenarios, providing multiple safety nets that catch problems at different stages of development.

| Type | What it catches | Tools | When it runs |
|---|---|---|---|
| Unit | Logic bugs in isolated functions/classes | Vitest (Nest/Node); Jest (Angular) | Every commit/PR/release in CI |
| Integration | Service/API + DB interactions in a vertical slice | Vitest; Supertest; Mock Service Worker; GraphQL Code Generator | Every commit/PR/release in CI |
| E2E (UI) | Cross‑layer regressions in critical user journeys | Playwright; Mock Service Worker; GraphQL Code Generator | Every commit/PR/release in CI |
| Smoke | Deployment breakages and basic runtime failures | k6 | After deploy to develop and staging; limited on production; gates promotion |
| Load | Capacity limits and performance bottlenecks under stress | k6; Grafana Cloud k6 | Quarterly; before big launches; after performance changes |

Unit Tests:

These verify individual units of code (functions, methods, classes) in isolation. They are our fastest, most granular tests – for example, confirming a utility function correctly calculates a sum. We run unit tests on every code change via CI, giving immediate feedback and preventing low-level bugs from leaking into later stages.

- Technology: Vitest (Nest/Node) and Jest (Angular).
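As a minimal sketch of the kind of check a unit test makes, assume a hypothetical `sumCents` utility that totals amounts in integer cents. Neither the function nor the `expectEqual` helper comes from the Trellis codebase; in a real suite the assertions would be `expect(...)` calls inside a Vitest or Jest `test` block.

```typescript
// Hypothetical utility under test: totals amounts expressed in integer cents.
function sumCents(amounts: readonly number[]): number {
  return amounts.reduce((total, amount) => total + amount, 0);
}

// Tiny stand-in for a test runner's `expect(actual).toBe(expected)`.
function expectEqual<T>(actual: T, expected: T, label: string): void {
  if (actual !== expected) {
    throw new Error(`${label}: expected ${String(expected)}, got ${String(actual)}`);
  }
}

// Each case exercises the function in isolation, with no database or network.
expectEqual(sumCents([]), 0, "empty list sums to zero");
expectEqual(sumCents([2500, 1000, 499]), 3999, "sums line items");
```

Working in integer cents is an assumption made here to keep the arithmetic exact; it is not a statement about how Trellis stores amounts.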

Integration Tests:

These validate a vertical slice of services and endpoints (typically constructed around our backend feature libraries) without requiring a full production environment. They are essentially backend end-to-end tests. We spin up a local cluster of our APIs and services, with the databases and mock services needed to exercise a complete backend flow, for example processing an event checkout or placing an auction bid, to ensure the database, server logic, and external service stubs all work together the way we expect.

Integration tests give us confidence in the server/business logic layer early in the development cycle and prevent regressions. As we build this out, whenever we run into an issue in production, we can add that case to our integration suite to catch it in the future.

- Technology:

- Vitest as the test runner.

- Supertest for making API calls from Vitest.

- GraphQL Codegen for generating the requests we are making to the running cluster being tested.

- Mock Service Worker for mocking out external services, with an IPC bridge so we can control external mocks from the test runner since the cluster is running in separate processes.
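To illustrate the idea of controlling mocks across process boundaries, here is a minimal sketch of the receiving side of such a bridge. Everything in it is hypothetical: the `MockCommand` message shape, the `MockOverrides` class, and the service names are illustrative assumptions, not the Trellis implementation. In a real setup the commands would arrive over an IPC channel (for example Node's `process.on("message", ...)` in a forked process) and the mock handlers would consult the registry when building a response.

```typescript
// Hypothetical message a test runner could send to a service process.
type MockCommand =
  | { kind: "set"; service: string; status: number; body: unknown }
  | { kind: "reset" };

interface MockResponse {
  status: number;
  body: unknown;
}

// Lives inside each service process and records per-service overrides.
class MockOverrides {
  private readonly overrides = new Map<string, MockResponse>();

  // Applies one command received from the test runner.
  apply(command: MockCommand): void {
    if (command.kind === "reset") {
      this.overrides.clear();
      return;
    }
    this.overrides.set(command.service, { status: command.status, body: command.body });
  }

  // Returns the override for a service, or a default success response.
  resolve(service: string): MockResponse {
    return this.overrides.get(service) ?? { status: 200, body: { ok: true } };
  }
}

const mocks = new MockOverrides();
// A test asks the mocked payment provider to decline the next charge.
mocks.apply({ kind: "set", service: "payments", status: 402, body: { error: "card_declined" } });
const declined = mocks.resolve("payments");
// Resetting between tests restores the default behaviour.
mocks.apply({ kind: "reset" });
const restored = mocks.resolve("payments");
```

Resetting overrides between tests keeps one test's mock setup from leaking into the next.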

End-to-End (E2E) UI Tests:

These ensure that full user flows, from the UI through the API and down to the database, work correctly. We use Playwright to drive our Angular applications, simulating real user interactions in a browser against a test instance of our app. These tests mimic critical user journeys from start to finish (e.g., a donor checking in to an event, placing an auction bid, completing a checkout, and many other cases) to verify that all layers integrate properly and result in the expected state. E2E tests are slower and more complex than unit or integration tests, so we reserve them for the most critical flows. They give us a level of confidence that key user experiences will work in production as intended, which our integration tests alone cannot provide.

- Technology:

- Playwright.

- Mock Service Worker (MSW) with the same IPC bridge as described above.

- GraphQL Codegen for generating requests to seed data in specific ways for each test case. In the Playwright test we interact with the GraphQL and REST APIs the same way the product does in production to seed test cases. This ensures we are setting up the test cases using the same business logic a user would have gone through prior to the Playwright test.

Here is an example E2E test for a guest user and a logged-in user paying for a custom donation amount:

%%{init: {"theme":"base", "themeVariables": {"diagramPadding": 20}}}%%

graph TD

A[Start: Create Fundraiser with Custom Donation]:::accent --> B[Test: Guest Custom Donation]:::neutral

A --> C[Test: Logged-in Custom Donation]:::primary

B --> B1[Navigate to Donations]:::primary

B1 --> B2[Enter Custom Amount]:::primary

B2 --> B3[Add to Cart]:::primary

B3 --> B4[Fill Purchaser Info]:::primary

B4 --> B5[Add Payment Method]:::primary

B5 --> B6[Complete Purchase]:::success

C --> C1[Log In User]:::primary

C1 --> C2[Navigate to Donations]:::primary

C2 --> C3[Enter Custom Amount]:::primary

C3 --> C4[Add to Cart]:::primary

C4 --> C5[Complete Purchase with Saved Info]:::success

classDef primary fill:#ebf3ff,stroke:#ced2d3,color:#252525;

classDef neutral fill:#f2f2f2,stroke:#ced2d3,color:#252525;

classDef success fill:#eaffea,stroke:#3b963e,color:#252525;

classDef accent fill:#59acf5,stroke:#145ab8,color:#ffffff;

linkStyle default stroke:#dee1e3,stroke-width:2px;

And here is a much more involved set of tests ensuring that the authentication flow in our checkout is working correctly:

%%{init: {"theme":"base", "themeVariables": {"diagramPadding": 20}}}%%

graph TD

A[Start: Create Fundraiser]:::accent --> B[Test: Log In and Navigate to Summary]:::primary

A --> C[Test: Log In and Request Mailing Address]:::primary

A --> D[Test: Log In with Missing Payment Method]:::primary

A --> E[Test: Log In and Navigate to Ticket Holders]:::primary

A --> F[Test: Guest Sign Up]:::neutral

A --> G[Test: Guest Save Payment Method]:::neutral

A --> H[Test: Guest No Account Creation]:::neutral

A --> I[Test: Logged-in User Logout]:::primary

B --> B1[Add Donation to Cart]:::primary

B1 --> B2[Password Login with Complete Profile]:::primary

B2 --> B3[Navigate to Summary Page]:::primary

B3 --> B4[Verify Purchaser Details]:::success

C --> C1[Add Donation to Cart]:::primary

C1 --> C2[Password Login]:::primary

C2 --> C3[Fill Mailing Address]:::primary

C3 --> C4[Navigate to Summary]:::success

D --> D1[Add Donation to Cart]:::primary

D1 --> D2[Password Login]:::primary

D2 --> D3[Navigate to Payment Method Step]:::primary

E --> E1[Add Ticket to Cart]:::primary

E1 --> E2[Password Login]:::primary

E2 --> E3[Navigate to Ticket Holders Page]:::primary

E3 --> E4[Verify Summary]:::success

F --> F1[Add Donation to Cart]:::primary

F1 --> F2[Sign Up as New User]:::neutral

F2 --> F3[Fill Mailing Address]:::primary

F3 --> F4[Add Payment Method]:::primary

F4 --> F5[Verify Summary]:::success

G --> G1[Add Donation to Cart]:::primary

G1 --> G2[Fill Purchaser Info]:::primary

G2 --> G3[Add Payment Method]:::primary

G3 --> G4[Check "Save My Info"]:::primary

G4 --> G5[Complete Purchase]:::success

G5 --> G6[Verify Account Created]:::success

H --> H1[Add Donation to Cart]:::primary

H1 --> H2[Fill Purchaser Info]:::primary

H2 --> H3[Add Payment Method]:::primary

H3 --> H4[Uncheck "Save My Info"]:::neutral

H4 --> H5[Complete Purchase]:::success

H5 --> H6[Verify No Account]:::neutral

I --> I1[Pre-Login User]:::neutral

I1 --> I2[Add Donation to Cart]:::primary

I2 --> I3[Click Sign Out]:::neutral

I3 --> I4[Verify Logged Out]:::success

classDef primary fill:#ebf3ff,stroke:#ced2d3,color:#252525;

classDef neutral fill:#f2f2f2,stroke:#ced2d3,color:#252525;

classDef success fill:#eaffea,stroke:#3b963e,color:#252525;

classDef accent fill:#59acf5,stroke:#145ab8,color:#ffffff;

linkStyle default stroke:#dee1e3,stroke-width:2px;

Smoke Tests:

This is a small suite (in comparison to integration and E2E) of sanity-check tests, implemented with k6, that runs against deployed environments (develop, staging, and production) to verify that core functionality is up and running after each deployment. Think of smoke tests as an automated "spot check" that, for example, hits a few important API endpoints or simulates a simple user action on a freshly deployed environment. They aren't deep or long-running, but they catch obvious issues in basic functionality that a deployment could have introduced. We run smoke tests automatically via CircleCI after every deployment to develop and staging, and a subset for production, acting as an early warning system. If a smoke test fails (say, a critical API isn't responding, or a user flow is broken), our deployment is marked as failed and the team addresses the problem before users notice (hopefully!). In the future, we're considering staged rollouts and automatic rollbacks guided by smoke tests; for instance, using a tool like Flagger to do incremental deployments and roll back if smoke tests detect a failure. This will become much more important as we scale Trellis to multiple regions, so we aren't deploying to ALL of Trellis at the same time (like CrowdStrike did that one time...).

- Technology: k6, triggered from CircleCI.
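The gating rule described above (any failing smoke check fails the deployment) can be sketched as a small pure function. The `SmokeCheck` shape and check names are hypothetical, and this is not the Trellis pipeline code; with k6, this kind of gate is usually expressed through thresholds rather than hand-written logic.

```typescript
// Hypothetical result of one smoke check against a freshly deployed environment.
interface SmokeCheck {
  name: string;
  passed: boolean;
}

interface GateResult {
  deployOk: boolean;
  failures: string[];
}

// A deployment passes only if at least one check ran and none of them failed.
function evaluateSmokeChecks(checks: readonly SmokeCheck[]): GateResult {
  const failures = checks.filter((check) => !check.passed).map((check) => check.name);
  return { deployOk: checks.length > 0 && failures.length === 0, failures };
}

const result = evaluateSmokeChecks([
  { name: "API health endpoint responds", passed: true },
  { name: "fundraiser page loads", passed: false },
]);
// result.deployOk is false and result.failures lists the failing check.
```

Treating an empty result set as a failure is a deliberate choice in this sketch: a run that executed no checks should not be mistaken for a healthy deployment.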

Load Tests:

These are heavy-duty performance tests using k6 that stress-test the system under high load. We simulate extreme traffic and usage scenarios (many concurrent bidders, a spike of donations in a short window, etc.) to ensure the platform can handle peak demand and to find any performance bottlenecks. Unlike smoke tests, load tests push the system to its limits (throughput, database load, concurrency) and observe how it behaves when scaled up (and how it responds to scaling events). At Trellis, we maintain a load testing suite covering core flows and run it quarterly in a production-like environment that is deployed separately from all other environments, on the same type of Kubernetes (k8s) infrastructure that production runs on. (It's intense and not practical to run on every commit, so for now we run it on a schedule, outside the normal CI pipeline and automations.) The insights from these load tests help us spot emerging capacity issues or slow queries early and inform the work for the upcoming quarter. (In the future, we may automate load tests to run on a schedule or trigger them before major releases, integrating performance checks into CI similar to our smoke and E2E tests.)

- Technology: k6 and Grafana Cloud k6.

Each of these test types builds on the previous. Unit tests catch issues in isolation; integration tests catch issues in how components work together; E2E tests catch issues in full user workflows; smoke tests ensure that deployments did not break the basics; and load tests ensure we meet performance and scale requirements. Together, they create a layered safety net that dramatically improves our confidence in every software release, which allows us to keep moving incredibly fast and ship new features and fixes to our customers (even with a small team).

Aligning Testing with Key Initiatives

Our testing strategy is not an abstract "nice to have"; it is tightly aligned with Trellis's broader engineering initiatives. In fact, a major catalyst was our recent performance project, "Optimize Data Access and Querying to Reduce Server and DB Load" (I know, not a very exciting name), which aimed to improve system efficiency and scalability across the platform. (A blog post on the Valkey-backed Model Cache we built is coming in the future, stay tuned!) We realized that bolstering our testing would reinforce that goal in multiple ways:

- Performance Validation: As part of the performance project, we stood up a dedicated load-testing environment and pipeline to run high-stress tests on realistic scenarios. This means that when we optimize a database query or add caching, we can validate under production-like load that the change works as intended and doesn't introduce performance regressions. The drive to reduce database load is thus baked into our test strategy: every critical user path should be not only functionally correct but also performant under peak conditions. For example, if we refactor our donation checkout code, we'll use load tests to ensure it still handles a surge of concurrent donors within acceptable response times (p95/p99).

- Reliability & Stability: The outcome of the performance project goes hand in hand with our emphasis on reducing bugs and outages through testing. Bugs are not the only issues we are trying to prevent; we also want to ensure that a (theoretically) bug-free system works the same with 1,000 donors as it does with 1. Strengthening our automated regression tests and smoke tests decreases the chance that a new code change will introduce an issue that puts undue load on the servers or triggers a failure that wasn't caught at a lower level. Thorough testing can also prevent many load-related problems (like an infinite loop or an N+1 database query bug) from ever reaching production. We measure this alignment via our engineering scorecard: we track incidents and regressions that escape to production, and our goal is to drive those numbers down by catching issues earlier at every stage. These incidents and regressions get classified and tracked internally. If an incident is classified as an outage, we assign it a time value based on how long the outage lasted and track it against our uptime goal. In practical terms, we want to see a reduction in high-severity incidents (whether that be a partial or full outage or just some subcomponent of the system going down) and in the kind of bugs that customers report. Our deployment pipeline now has quality gates at every step (pre-merge tests, post-deploy smoke tests, periodic load tests), so by the time code hits production, it has run a gauntlet of checks.

Our testing and automation efforts are not in a silo. They directly serve key company Objectives and Key Results (OKRs). Next, we'll break down the three strategic pillars of our testing vision, each corresponding to a major focus area we targeted in 2025. These were our big "Rocks" for the quarter, and each comes with specific goals and metrics to measure success.

Establishing a Robust Load Testing Infrastructure

Our Objective

Build and operationalize a reproducible load-testing framework that can regularly assess system performance at scale. We set out to create a process where we could simulate our largest fundraising events and highest traffic scenarios on-demand and use the results to inform development decisions for the following quarter.

What We Did

We designed and deployed an automated load test suite covering core platform flows under stress. Using k6, we simulated high-volume gala events, with hundreds of concurrent donors and bidders performing actions over short periods (the kind of traffic we would see at a real event). One scenario simulates a flurry of auction bids arriving simultaneously; another simulates a surge of donation checkouts, and so on. To support this, we created a dedicated "load testing" environment in our infrastructure. This environment is essentially a full-stack replica of production that we can deploy to for running our load tests. We extended our CircleCI CI/CD pipeline to deploy the latest code to this environment through our tag-based deployment workflows (tags of the form vX.Y.Z-lt.V). Then we run the k6 test suite against it using Grafana Cloud k6. This environment uses production-like configurations and data seeds to mimic production as closely as possible.
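A minimal sketch of how a tag of the form vX.Y.Z-lt.V could be recognized, for instance to decide whether a pushed tag should target the load-testing environment. The function name is hypothetical, and treating the trailing V as a numeric iteration counter is an assumption; the actual CircleCI tag filter is not shown in this article.

```typescript
// Matches tags such as "v1.4.2-lt.3": a semantic version plus a load-test suffix.
const LOAD_TEST_TAG = /^v(\d+)\.(\d+)\.(\d+)-lt\.(\d+)$/;

interface LoadTestTag {
  version: string;   // the X.Y.Z part
  iteration: number; // assumed meaning of the trailing V
}

// Returns the parsed tag, or null when the tag is not a load-test tag.
function parseLoadTestTag(tag: string): LoadTestTag | null {
  const match = LOAD_TEST_TAG.exec(tag);
  if (match === null) return null;
  const [, major, minor, patch, iteration] = match;
  return { version: `${major}.${minor}.${patch}`, iteration: Number(iteration) };
}

const parsed = parseLoadTestTag("v1.4.2-lt.3"); // version "1.4.2", iteration 3
const ignored = parseLoadTestTag("v1.4.2");     // null: a regular release tag
```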

We decided to run the load tests under certain conditions and timeframes:

- A quarterly schedule

- Before big launches

- After system optimizations to validate our assumptions and performance hypotheses

We chose these conditions instead of running on every commit (like our unit, integration, and E2E tests) given how intensive load tests are. Lighter-weight smoke tests, however, run on every deployment, as mentioned previously. In the future, we are considering automating load tests to run during develop, staging, and production deployments and defining pass/fail performance criteria as part of CI.

As part of the load testing process we defined a set of metrics we would observe and compare before and after. For example, for the Model Cache system we built we tracked the before and after of the following metrics:

- Database Query Time: Total time spent querying the database.

- Average Request Time: Average time spent from receiving a request to sending a response.

- Queries Per Second: The average number of queries started per second.

- Latency Percentiles (p90, p95, p99): The percentiles of the response time distribution.

- Breaking Point: More of a qualitative measure, this attempts to determine at what point the system starts to break down.
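To make the latency metrics above concrete, here is a small sketch that computes the average and the p90/p95/p99 values from a list of response times. It uses the nearest-rank method, which is one of several percentile conventions; load-testing tools may interpolate and report slightly different values. The function names are illustrative.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it.
function percentile(samplesMs: readonly number[], p: number): number {
  if (samplesMs.length === 0) throw new Error("no samples");
  if (p <= 0 || p > 100) throw new Error("p must be in (0, 100]");
  const sorted = [...samplesMs].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[rank - 1] as number;
}

// Arithmetic mean of the samples.
function average(samplesMs: readonly number[]): number {
  if (samplesMs.length === 0) throw new Error("no samples");
  return samplesMs.reduce((sum, value) => sum + value, 0) / samplesMs.length;
}

// Summary that can be captured before and after a change and compared.
function summarize(samplesMs: readonly number[]) {
  return {
    avg: average(samplesMs),
    p90: percentile(samplesMs, 90),
    p95: percentile(samplesMs, 95),
    p99: percentile(samplesMs, 99),
  };
}
```

The percentiles matter because an average can hide a slow tail: a handful of multi-second requests barely moves the mean but shows up clearly in p99.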

We assigned clear ownership for this load testing process to a developer on the team. This developer ensures the k6 tests stay up to date with new features, schedules the quarterly test runs, adds new testing scenarios, and drives any follow-up actions from the results. By having an owner, we treat load testing as an ongoing practice and not a one-off project.

Expected Outcomes

By investing in load testing infrastructure and process, we aim to surface performance bottlenecks proactively and increase our confidence that Trellis can handle surges in usage. We measure success through a few key metrics:

Load Test Coverage

We want the load test suite to cover as close to 100% of our critical user flows as possible. In other words, every high-traffic scenario (donations, ticketing, bidding, check-in, etc.) should have a stress test. By the end of the quarter we hit that goal for the initial critical flows, and we plan to keep expanding the suite as new features emerge. These initial flows cover:

- Attendee Check-In: Attendees being checked in via name search and check-in flow.

- Auction Bidding: High concurrency silent auction bidding.

- Checkout: Focuses on concurrent cart modifications and checkouts.

- Fund-a-need (FAN) Donations: Think of FANs as a live roll call of donations being given at an event.

- Buy Now Items in our Sales Entry System (SES): Our SES is meant for volunteers and organizers to process anything being sold under the fundraiser on the spot while at the event.

- Golf Tournament/Gala Scenario: A composite test involving check-in, auction bidding, and checkout, timed the way a real event would run: an influx of check-ins at the start that peters off while checkouts and bidding ramp up.
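The shape of the composite scenario (check-in peaking early, bidding ramping up later) can be sketched as staged ramps, similar in spirit to the ramping stages that load tools such as k6 let you configure. The durations and user counts below are made-up illustrative numbers, not Trellis's real profile, and `usersAt` is a hypothetical helper.

```typescript
// One ramp stage: move linearly to `target` concurrent users over `durationSec`.
interface Stage {
  durationSec: number;
  target: number;
}

// Linearly interpolates the concurrent-user target at time tSec, starting from 0 users.
function usersAt(stages: readonly Stage[], tSec: number): number {
  let stageStart = 0; // time at which the current stage begins
  let from = 0;       // user count at the start of the current stage
  for (const stage of stages) {
    const stageEnd = stageStart + stage.durationSec;
    if (tSec <= stageEnd) {
      const progress = stage.durationSec === 0 ? 1 : (tSec - stageStart) / stage.durationSec;
      return Math.round(from + (stage.target - from) * Math.max(0, progress));
    }
    stageStart = stageEnd;
    from = stage.target;
  }
  return from; // after the last stage, hold the final target
}

// Check-in: sharp influx in the first 5 minutes, then tapering off.
const checkIn: Stage[] = [
  { durationSec: 300, target: 200 },
  { durationSec: 600, target: 20 },
];
// Bidding: quiet during check-in, then ramping up.
const bidding: Stage[] = [
  { durationSec: 300, target: 20 },
  { durationSec: 600, target: 150 },
];

const checkInMidRamp = usersAt(checkIn, 150); // halfway up the first ramp: 100 users
const biddingAtEnd = usersAt(bidding, 900);   // end of the bidding ramp: 150 users
```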

Performance Trends

We track average response times, throughput, and error rates under load for each scheduled test. Our goal is to see stable or improving performance trends over time, with no degradation as we add features and make changes, and to spot any anomalies quickly. If the metrics show a regression beyond our set thresholds, that triggers an immediate investigation before the code goes live.
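A threshold check of this kind can be sketched as a comparison between a baseline run and the current run. The metric names and the 10% tolerance are illustrative assumptions; the actual thresholds Trellis uses are not stated here.

```typescript
// Metrics captured from one load test run (lower is better for all three).
interface LoadMetrics {
  avgMs: number;
  p95Ms: number;
  errorRate: number; // fraction of failed requests, e.g. 0.01 for 1%
}

// Returns the names of metrics that worsened by more than the relative tolerance.
function findRegressions(
  baseline: LoadMetrics,
  current: LoadMetrics,
  tolerance = 0.1, // hypothetical: allow up to 10% degradation
): string[] {
  const keys: (keyof LoadMetrics)[] = ["avgMs", "p95Ms", "errorRate"];
  return keys.filter((key) => current[key] > baseline[key] * (1 + tolerance));
}

const regressions = findRegressions(
  { avgMs: 120, p95Ms: 300, errorRate: 0.01 },
  { avgMs: 125, p95Ms: 360, errorRate: 0.01 },
);
// Only p95Ms is flagged: 360 ms exceeds 300 ms plus the 10% tolerance.
```

A relative tolerance absorbs normal run-to-run noise while still flagging meaningful slowdowns; a non-empty result would be the trigger for the investigation described above.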

Incidents Caught Pre-Production

Perhaps the most important metric is qualitative: the number of performance issues or capacity limits we discover in load testing before they affect real customers. Each load test run is an opportunity to catch things in a controlled environment. A "win" might be discovering that a database query starts to slow down at 10,000 concurrent users – and then fixing it long before any client hits that scale. Every issue caught and fixed from a load test is an incident or outage that never had to happen in production.

Smoke Test "Safety Net"

We also monitor our smoke tests as an early warning system. We track how frequently they catch problems in lower environments (develop and staging) that could have become incidents if deployed further. Our goal is to catch the vast majority of issues in these automated tests so that production remains quiet and stable.

Achieving this load testing goal means that by the end of the quarter, we know our system's breaking point (if there is one and if we found it) and have confidence in its scalability. We can support larger fundraisers and higher user traffic without scrambling. To put it another way, we don't want to be surprised by success. If a charity event on Trellis suddenly has ten times the usual traffic, we should have already tested that scenario and know we can handle it. In the long run, this underpins our vision of a platform that self-scales with zero manual intervention, where no latency issues occur even during massive traffic spikes.

Improving System Stability & Traceability

Objective

Enhance how we monitor, measure, and respond to system issues in production. While testing helps prevent issues, we also need great observability and incident response when things do go wrong. Our goal was to implement a clear stability reporting toolkit and process so that we know about issues before customers do and can trace problems to their root cause quickly.

What We Did – Tooling

We audited and standardized our monitoring and alerting stack to ensure we have full visibility. This included doubling down on tools like Sentry (for error tracking on front-end and backend), BetterStack (for uptime, logging and infrastructure monitoring), and Apollo Studio (for GraphQL error and performance analytics). We tuned the configuration of these services to reduce "noise", filtering out or aggregating non-actionable errors so that alerts really mean something. For example, if an external API occasionally times out but auto-recovers, we might log it but not require action from the team at 2am. Conversely, we adjusted what "down" means in our uptime checks to be more aggressive in detecting problems (even a few seconds of downtime in an API triggers an alert). Alerts pipe into Slack and email with the relevant context. Our goal: if something goes wrong in production, we see it and hear about it right away, with enough detail to start diagnosing.

We introduced a clear incident reporting process as well. We created a standard Incident Report template in Linear that the team fills out for any major incident (e.g. a significant outage or non-downtime incident that is deemed critical enough). The report captures the root cause, customer impact, how it was resolved, and most importantly lessons learned and follow-up actions. The act of writing these post-mortems has become part of our team culture. They ensure we reflect on how to prevent the issue in the future (maybe by adding a test, improving monitoring, or adjusting processes).

We also defined what constitutes a "Major" vs. "Minor" incident to bring clarity and consistency. Major incidents are things like a site-wide outage or data loss, i.e. anything our users definitely notice. Minor incidents might be transient issues that self-recover or affect only a small subset of users (maybe one endpoint had a one-off bug, regression, or issue). For minor issues, we don't necessarily sound the alarm, but we do log and review them. In fact, we've set up an #incidents Slack channel where BetterStack posts any uptime blips and/or auto-resolved issues; part of this initiative was making sure even those minor hiccups get visibility so we can track whether they start happening more often and address underlying causes.

We also implemented a weekly "error review" process. Each week, the developer responsible for one of the error tracking tools looks at the latest errors and warnings coming in from Sentry, Apollo Studio, etc. The mandate is to triage everything: if an error represents a real bug or regression, file a ticket for it; if it's an artifact of user behavior or a known non-issue, mark it as such or filter it out. This keeps our backlog fed with any new bugs that slip past tests, and it prevents excessive noise by cutting out non-issue and/or non-actionable reports. This process has already paid off. For example, a Sentry alert led us to an edge-case bug in our integration with a third-party API that occurred only for a specific customer data condition, and we fixed it before it escalated to a support ticket.

Expected Outcomes

We'll consider this initiative successful when we have both the tools and processes in place to catch issues early and respond methodically. This process and tool implementation is still ongoing and will be for a while as we figure out how best to leverage the tools, which processes work for us, and what benefits we are getting. We are watching these key metrics and results:

Major Incidents

We aim to drive the number of major, user-visible outages as close to zero as possible. By quarter's end, we did see a reduction. Major incidents are a lagging indicator: if our pre-production testing and minor-incident handling are effective, major incidents should be very rare. We haven't had a true P0 outage in months, and we intend to keep it that way. Each major incident gets an Incident Report tracked in Linear that is shared company-wide. These incident reports are written so that they are digestible for everyone on the team, regardless of technical knowledge. Each major incident has a time value assigned to it for how long we were "down", which gets tracked against our uptime target.

Our ultimate goal: zero downtime incidents in a quarter.
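The arithmetic behind tracking incident time values against an uptime target can be sketched as follows. The 99.9% target and the incident durations are hypothetical examples; the article does not state Trellis's actual target.

```typescript
// Uptime percentage for a period, given the downtime (in minutes) of each outage.
function uptimePercent(periodMinutes: number, downtimeMinutes: readonly number[]): number {
  if (periodMinutes <= 0) throw new Error("period must be positive");
  const totalDown = downtimeMinutes.reduce((sum, minutes) => sum + minutes, 0);
  // Downtime cannot exceed the period itself.
  return (1 - Math.min(totalDown, periodMinutes) / periodMinutes) * 100;
}

// A 90-day quarter has 90 * 24 * 60 = 129,600 minutes.
const quarterMinutes = 90 * 24 * 60;

// Two hypothetical outages of 12 and 30 minutes: 42 minutes of downtime,
// which works out to roughly 99.97% uptime for the quarter.
const uptime = uptimePercent(quarterMinutes, [12, 30]);

// Hypothetical target of 99.9%, which allows about 129.6 minutes per quarter.
const meetsTarget = uptime >= 99.9;
```

Framing the target as a downtime budget in minutes makes it easy to see how much room a single incident consumes.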

Minor Incidents

For smaller hiccups that auto-recover, our goal isn't zero (some transient issues are inevitable in any system), but we want them to be infrequent and resolved extremely quickly. We track how many minor issues occur and the cause so they can be triaged and dealt with. The trend we want is a downward or stable count of minor incidents, and a swift resolution time for each. If minor issues spike or take too long to fix, that's a signal to improve somewhere (maybe add a new test, or enhance our auto-healing/auto-recovery mechanisms). Thus far, with better monitoring, we're catching even subtle issues and ensuring they don't turn into larger problems.

Regressions Caught vs. Missed

We closely monitor how many bugs make it to production that should have been caught earlier (we label these as regressions). This is tracked in our weekly Customer Reports and development scorecard. We want to see the number of regressions that hit production drop week over week. And indeed, as our new E2E tests and smoke tests have come online, we've observed a declining trend in new regressions reaching customers. Our goal is that any time a bug does slip through, it's a truly novel edge case, not something in a flow we knew to test. Our definition of a regression is simple: "It was working the majority of the time, now it is not."

Following the Process and Improving

We also measure whether our new processes are being followed. What we track:

- Did we do the error review every week this quarter?

- Did we produce incident reports for all qualifying incidents?

- Are our efforts resulting in fixes?

In each of our weekly Customer Reports (filled in each Monday, shared with the team on Tuesday), we report the following for the last 3 weeks (with a +/- delta on each stat):

- Load Tests, results of the current load test and any action items being addressed.

- Uptime, # of Major and Minor incidents (separately), Notes (links to Linear Issues), and a downtime Metric (if applicable).

- Regressions, # of regressions that week (target of 0) and Notes (typically, Linear Issues created in connection with any reported regressions).

- Smoke Tests, # of smoke tests running, target # of smoke tests running, # of errors caught by smoke tests.

- E2E Tests, # of E2E tests running, target # of E2E tests, Notes (typically issues found and fixed as part of writing new E2E test cases), and # of regressions and/or bugs discovered as part of writing the tests.
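The "+/- delta on each stat" can be computed with a few lines of code. This is a sketch with a hypothetical `WeeklyStat` shape and made-up numbers, shown for the regressions count; the same idea applies to any of the stats above.

```typescript
// One row of a weekly report for a single stat.
interface WeeklyStat {
  week: string;
  regressions: number;
}

// Adds a signed week-over-week delta to each entry; the first entry has no prior week.
function withDeltas(stats: readonly WeeklyStat[]): Array<WeeklyStat & { delta: string }> {
  return stats.map((stat, index) => {
    const previous = stats[index - 1];
    if (previous === undefined) return { ...stat, delta: "n/a" };
    const diff = stat.regressions - previous.regressions;
    return { ...stat, delta: diff > 0 ? `+${diff}` : `${diff}` };
  });
}

const report = withDeltas([
  { week: "Week 1", regressions: 3 },
  { week: "Week 2", regressions: 1 },
  { week: "Week 3", regressions: 1 },
]);
// Deltas for the three weeks: "n/a", "-2", "0".
```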

By focusing on stability and traceability, we have created (and are still improving on) an environment of continuous improvement. Every outage or error becomes a learning opportunity to make our platform better, either by adding a test, refining our monitoring, or hardening the code. Over time, this yields a positive cycle: fewer incidents, faster detection, and a reputation for reliability that gives our customers confidence. As our leadership often emphasizes, achieving operational excellence is key to Trellis's success, and this pillar ensures we have the systems and accountability to support that excellence. We've already seen a culture shift internally: developers are now proactive about monitoring dashboards and proud to report "nothing of concern" in the weekly review, and when something does happen, there's a structured follow-up with a learning opportunity everyone takes part in. These learning opportunities are constructively critical of the systems, processes, and code. Every developer, product manager, and designer takes an interest in what we can do to improve and to prevent the issue from happening again. It's a big step toward making Trellis a highly reliable and stable platform.

Automating Regression Testing for Critical Flows

Objective

Prevent regressions from ever reaching production by continuously testing our most critical user flows end-to-end. In other words, build an automated regression test suite that runs all the time (as part of CI) and covers the highest-risk scenarios for our business, so that if anything breaks in those areas, we catch it within minutes. This was our answer to the spike in regressions we saw. To ensure we never have a repeat of that April surge, we are beefing up automated QA in the right places.

Identifying Critical Flows

We began by clearly defining what our "critical flows" are. These are the user journeys that, if broken, would severely impact customers or require urgent hotfixes. Based on past incidents and our product knowledge, we identified candidates like user login and onboarding, payment checkout (donations and ticket purchases), auction bidding and auction closing flows, and the event check-in process. We looked at where we've had P1 support tickets or urgent issues; for instance, auction closing logic had a bug last year. Interestingly, the regression spike in April helped highlight some areas of code churn that needed better test coverage. For example, a lot of changes were happening around our check-in and ticketing flows during that period, indicating we should fortify those with automated tests.

Building the Tests

For each critical flow, we are creating an end-to-end test case that simulates a real user performing the entire journey. On the front-end side, we use Playwright (headless Chrome, WebKit, and Firefox) to drive our Angular application's UI for things like login, clicking through a donation, entering payment info, etc. On the backend side, for flows that don't involve a UI (like an automated auction closing job that runs on the server), we are writing what are essentially integration tests that trigger those processes and verify the outcomes. We already had a strong foundation of backend feature tests in our codebase, so we often built on those. We made sure to stub out external dependencies: for example, when the test hits an email-sending step or a payment gateway, we intercept those calls so we're not relying on third-party services in our CI environment. We leverage tools like Mock Service Worker (MSW) and in-memory databases to simulate external systems and data, ensuring the tests run reliably every time.

One of our guiding requirements was test reliability. Flaky tests can do more harm than good, so we invested in making these E2E tests as deterministic as possible. That meant generating stable test data, using proper waits for asynchronous actions (no sleeping for arbitrary amounts of time; we wait for specific UI elements or server responses), and resetting state between tests. We iterated on these tests until they passed consistently. In fact, we treated any flaky behavior as a bug to be fixed before integrating the test into our main suite.
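The idea of waiting for a specific condition instead of sleeping can be sketched without any test framework. The helper below is a hypothetical illustration (the names `waitFor`, `timeoutMs`, and `intervalMs` are assumptions, not Trellis code); in UI tests, Playwright's auto-waiting locators and retrying assertions generally fill this role.

```typescript
// Hypothetical helper: poll a condition until it yields a value or a deadline passes.
// It waits only as long as needed, instead of sleeping for a fixed, arbitrary time.
async function waitFor<T>(
  condition: () => T | undefined | Promise<T | undefined>,
  options: { timeoutMs?: number; intervalMs?: number } = {},
): Promise<T> {
  const { timeoutMs = 5000, intervalMs = 50 } = options;
  const deadline = Date.now() + timeoutMs;

  while (true) {
    const result = await condition();
    if (result !== undefined) return result; // condition met: stop waiting immediately
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs}ms`);
    }
    // Pause briefly before polling again.
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
}
```

A backend test could use something like this to wait for a job to report completion, failing with a clear timeout error rather than passing or failing depending on machine speed.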

We also put an emphasis on developer experience: making it easy to add new tests, run tests, and maintain them. We built a set of helpers and abstractions for common actions: for example, a helper to create a test fundraiser event via the API (so you have a fundraiserId to work with) and a helper to simulate a user login, among many others. This dramatically reduces the boilerplate each new test needs and ensures we seed data the same way it is created in production. Thanks to these utilities, adding a new E2E test for a given flow now takes on the order of a day or two of work at most (depending on whether new utilities need to be added), whereas initially it might have taken quite a bit longer to scaffold out these tests. Our goal is that whenever a new critical feature or incident area is identified, any developer on the team can spin up a new E2E (or integration) test for it in short order, without frustration. This ease of use was key to broadening coverage: developers are much more willing to write tests if the framework handles the heavy lifting.
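To illustrate what such a helper can look like, here is a minimal sketch of a test-data factory. Everything in it (`Fundraiser`, `createFundraiserFactory`, the field names and defaults) is hypothetical; the real helpers create data through the API, whereas this version only builds in-memory objects so that it can run standalone.

```typescript
// Hypothetical shape of a fundraiser, used only for this illustration.
interface Fundraiser {
  fundraiserId: string;
  name: string;
  currency: "CAD" | "USD";
  ticketPriceCents: number;
}

// Returns a builder whose IDs come from a counter, so every run produces
// the same data (no random values, no timestamps).
function createFundraiserFactory(prefix = "test-fundraiser") {
  let sequence = 0;
  return function build(overrides: Partial<Fundraiser> = {}): Fundraiser {
    sequence += 1;
    return {
      fundraiserId: `${prefix}-${sequence}`,
      name: `Test Gala ${sequence}`,
      currency: "CAD",
      ticketPriceCents: 5000,
      ...overrides, // a test states only the fields it cares about
    };
  };
}

const buildFundraiser = createFundraiserFactory();
const gala = buildFundraiser();
const usdGala = buildFundraiser({ currency: "USD", ticketPriceCents: 12500 });
console.log(gala.fundraiserId, usdGala.fundraiserId);
```

Sensible defaults plus overrides keep each test focused on the one or two fields that matter to the scenario, which is where most of the boilerplate savings come from.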

Continuous Integration

Once the initial critical flow tests were ready (we started with a single flow so that the automation framework would be in place), we integrated them into our CircleCI pipeline. Now, every time a developer opens a pull request, commits a change to a PR, merges to the main branch, or releases to any of our environments, CI runs not only the unit and integration tests but also these critical E2E scenarios (in an isolated test environment, against the full Trellis cluster spun up in the CI workflow). If any critical flow test fails, the CI build is red and blocking, just like a unit test failure would be. This is incredibly important for us because it means that if a change inadvertently breaks, say, the donation checkout process, we find out within minutes during CI, not later that day when a donor or customer encounters the issue (or worse, a donor reports it to one of our customers). We've already had "saves" from this system: for example, a recent change would have caused a bug in our donor checkout flow; our E2E test caught it in CI, we fixed the bug on the spot, and it never made it to production. Additionally, in one case a test uncovered an existing bug in an unusual sequence of user steps involving magic links, which we then fixed. These kinds of catches are exactly what we hoped for: every time we catch an issue ahead of time or find an existing bug, we prevent a report from a customer and potentially a larger incident.

We monitor the results of the E2E suite closely. We log any failures and analyze whether each was a legitimate issue or (in the rare case) a test fragility issue. On the rare occasion a test is flaky, we handle it the same way we handle flaky integration tests: as a bug that needs to be fixed. The integrity of this suite is paramount.
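One way to separate a legitimate failure from a fragile test is to compare how the same test behaved across repeated attempts on the same commit. The sketch below assumes a hypothetical record of attempt outcomes; it is not how CircleCI or Playwright report results, only the classification logic.

```typescript
type Outcome = "pass" | "fail";
type Verdict = "passed" | "flaky" | "failed";

// Classify one test from its attempts on the same commit (first run plus retries).
function classifyAttempts(attempts: Outcome[]): Verdict {
  if (attempts.length === 0) throw new Error("No attempts recorded");
  const passes = attempts.filter((attempt) => attempt === "pass").length;
  if (passes === attempts.length) return "passed"; // consistently green
  if (passes === 0) return "failed"; // consistently red: likely a real regression
  return "flaky"; // mixed results on identical code: the test itself needs fixing
}

// Illustrative data only.
const report: { name: string; attempts: Outcome[] }[] = [
  { name: "donor checkout", attempts: ["pass"] },
  { name: "magic link login", attempts: ["fail", "pass"] },
  { name: "auction close", attempts: ["fail", "fail", "fail"] },
];

for (const entry of report) {
  console.log(`${entry.name}: ${classifyAttempts(entry.attempts)}`);
}
```

Treating "flaky" as its own verdict, rather than letting a passing retry hide it, is what makes it possible to file the fragility as a bug.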

Expected Outcomes

Strengthening our automated regression suite for critical flows has several expected benefits, which we're already beginning to see:

Fewer Regressions in Production

Put simply, our goal is to catch issues in covered flows 100% of the time. As we add tests for each critical flow, we expect the number of regression bugs (especially serious ones) that reach production to plummet. Our long-term aspiration is zero critical regressions hitting customers (obviously). Practically, this quarter we've already seen a sharp decrease in hotfixes and urgent rollbacks. Each critical flow added to the suite is like closing the door on an entire category of bugs.

Confidence to Iterate Fast

When developers see that "green build" in CI, it now carries a lot more weight. It means not only did the code compile and basic tests pass, but every mission-critical user journey still works. This gives us the confidence to deploy continuously and iterate quickly without fear. Culturally, this was a big deal. We already moved very fast as a development team, but a green build now helps alleviate the anxiety that developers or product managers sometimes felt when releasing changes to core parts of the system. It supports our philosophy of "fast iteration without being constrained by fear." We can try bold changes or refactors, because if we break something crucial, our CI tests will call it out immediately, before it even gets to our develop environment!

Measuring Coverage

We track how many of the identified critical flows are automated. As of writing, we have about 64 unique flows automated (in the donor-facing checkout flow), and we're continuing to add more cases around authentication, check-in, SES, and some other major flows. This is now a metric on our engineering scorecard: "# of critical flows covered by automated tests." It provides visibility and accountability: if something is deemed critical but isn't yet tested, we all know it's a gap to fill. Going forward, as new features roll out or if we discover a new area of risk (say, a new integration or a part of the app that starts seeing a lot of use), we'll add it to the critical flows list and automate it as part of the project. Our projects don't only include the "business logic" code; they include everything needed to consider the project "done", and a major part of that is now the automation.
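A scorecard metric like this is easy to compute from a simple list of flows. The sketch below is an assumption about how such a list might be represented (`CriticalFlow` and `summarizeCoverage` are hypothetical names, and the statuses are illustrative, not the real scorecard).

```typescript
// Hypothetical record for one entry on the critical flows list.
interface CriticalFlow {
  name: string;
  automated: boolean; // true once an E2E or integration test covers the flow
}

// Summarize the scorecard metric and list the gaps still to fill.
function summarizeCoverage(flows: CriticalFlow[]) {
  const covered = flows.filter((flow) => flow.automated).length;
  const gaps = flows.filter((flow) => !flow.automated).map((flow) => flow.name);
  const percent = flows.length === 0 ? 0 : Math.round((covered / flows.length) * 100);
  return { covered, total: flows.length, percent, gaps };
}

// Illustrative statuses only.
const exampleFlows: CriticalFlow[] = [
  { name: "donor checkout", automated: true },
  { name: "authentication", automated: false },
  { name: "event check-in", automated: false },
  { name: "auction closing", automated: true },
];

const summary = summarizeCoverage(exampleFlows);
console.log(`${summary.covered}/${summary.total} critical flows covered (${summary.percent}%)`);
console.log("Gaps:", summary.gaps.join(", "));
```

Reporting the named gaps alongside the count keeps the metric actionable: the number shows progress, and the list shows what to automate next.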

Faster Debugging & Incident Prevention

When a test does fail in CI, it often provides immediate insight into what went wrong, because it's tied to a user scenario. Instead of a vague bug report days later, we get a stack trace and a reproducible scenario at the moment the code was introduced. This short-circuits the diagnose-fix cycle dramatically. And by analyzing any production issues that do occur, we can ask "Would an automated test have caught this?" If yes, we add one to prevent it next time. If not, we consider why we cannot test that case end-to-end and whether there are smaller pieces we can test, whether via our E2E Playwright tests or integration tests. This feedback loop ensures the suite's coverage keeps improving. In fact, part of our incident post-mortem process now is to consider writing an E2E test if the issue was in a critical area and wasn't already covered.

Ultimately, automating regression tests for critical flows has been about bringing that safety net closer, so we don't fall as far. It lets us develop and ship features quickly (we sometimes deploy to production several times a day, and to our develop environment dozens of times a day), while meeting the quality bar our customers expect. The net effect is fewer mid-fundraiser fire drills and emergency patches, and a smoother experience for users. Our vision of stress-free continuous deployment is becoming reality with this solid foundation of automated checks.

Automation in the CI/CD Pipeline

A core principle of our approach is that all these tests run automatically (or as our CEO says: Automagically) in our Continuous Integration/Continuous Deployment (CI/CD) pipeline. Integrating testing into CI means it happens consistently and doesn't rely on humans remembering to run test suites locally.

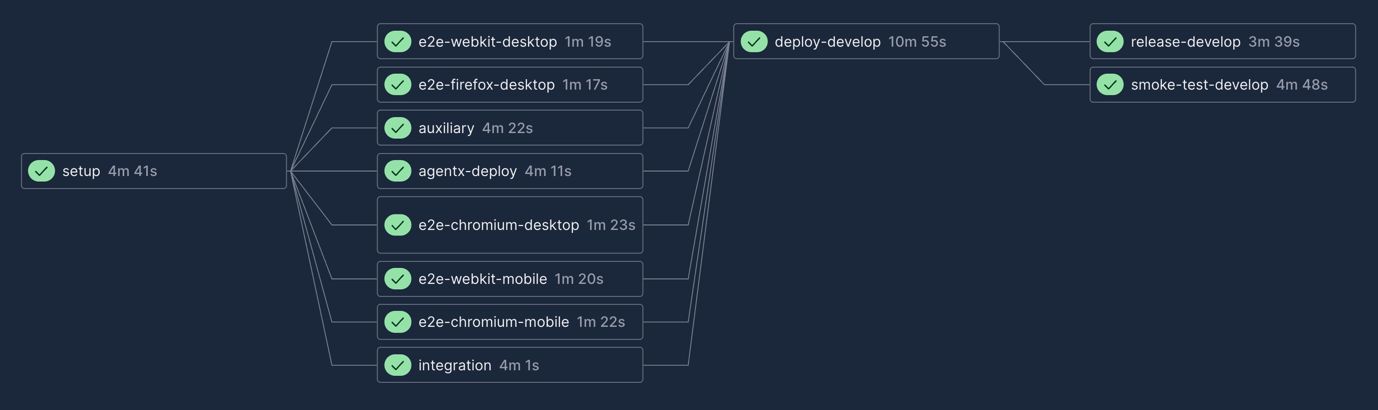

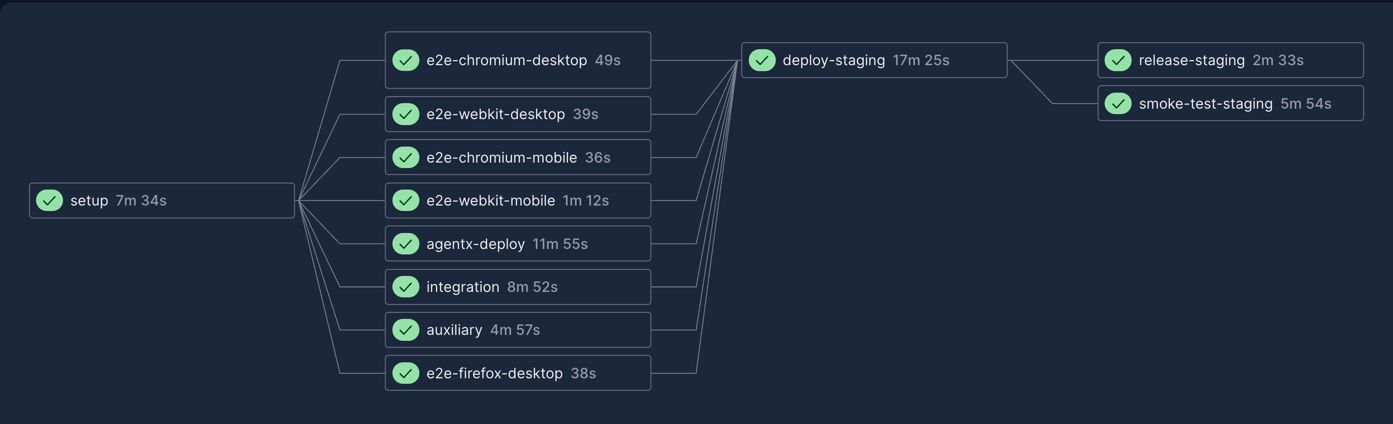

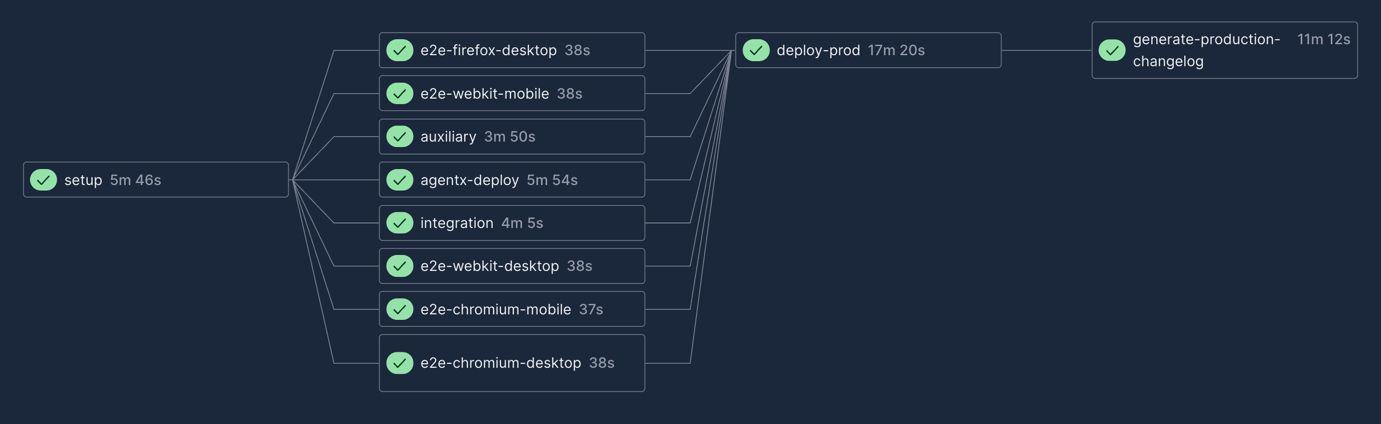

To get a visual sense of what runs when and where, here are some screenshots from our CircleCI workflows (don't take too much stock in the timings, each of these workflows will have a different caching state for our Nx targets):

Pull Request Workflow

TODO screenshot

Develop

Staging

Production

Pre-Merge Checks (Every Commit)

On every push to a pull request, CircleCI kicks off our build which runs:

- Unit tests (Vitest and Jest): Fast granular tests.

- Integration tests (Vitest + Supertest): Backend E2E tests.

- E2E tests (Playwright): UI E2E tests of our Angular applications. These run on a matrix of mobile and desktop with WebKit, Chrome, and Firefox.

- Lint (ESLint): Static linting to ensure we are following correct coding patterns and usage.

- Storybook: Builds and compiles all of our Storybooks (we don't currently use Storybook UI testing).

- Compilation (Angular, NestJS, Lambda): Builds and type checks our applications.

- Among many other automated checks

If any step fails, the PR cannot be merged. This gives developers immediate feedback: if you break a utility function or a piece of business logic, you'll know within minutes. It encourages developers to run tests locally and keep the suite healthy. By the time code is ready to merge, it has already passed potentially thousands of these checks.
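The E2E matrix mentioned in the list above is a cross product of form factors and browsers. The sketch below generates it with hypothetical names (`formFactors`, `buildMatrix`, and the entry naming scheme are assumptions); in a Playwright setup, entries like these would typically correspond to `projects` in the configuration file.

```typescript
// Hypothetical names; the real configuration may label these differently.
const formFactors = ["desktop", "mobile"] as const;
const browsers = ["chrome", "firefox", "webkit"] as const;

interface MatrixEntry {
  name: string;
  formFactor: (typeof formFactors)[number];
  browser: (typeof browsers)[number];
}

// Cartesian product: one entry per form factor and browser pair.
function buildMatrix(): MatrixEntry[] {
  const entries: MatrixEntry[] = [];
  for (const formFactor of formFactors) {
    for (const browser of browsers) {
      entries.push({ name: `${formFactor}-${browser}`, formFactor, browser });
    }
  }
  return entries;
}

const matrix = buildMatrix();
console.log(matrix.map((entry) => entry.name).join("\n"));
```

Two form factors times three browsers gives six combinations, and each one can run as its own parallel CI job.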

Automated Smoke Tests on Deploy

We use a continuous deployment approach, so when code is merged to the develop branch, our CI automatically deploys it to our develop environment. At that point, we trigger the smoke test suite to run against the freshly deployed environment. This is an external check, hitting the deployed services (as opposed to the local servers spun up in CI that earlier tests use). If the smoke tests pass, it's a signal that the core features are working in the live environment. We do the same when promoting to staging, and for production deploys we plan to run a limited set of smoke tests post-deployment to ensure nothing obvious is wrong. Importantly, if a smoke test fails in develop or staging, we halt the promotion to the next stage. For example, if a staging deployment fails a smoke test (perhaps an API isn't responding), we stop and fix it before pushing that release to production. This adds a final guardrail: no deployment goes fully live without a quick battery of end-to-end checks in a real environment. In the future, we're looking at tying this into incremental rollouts: e.g., deploy to a small percentage of production (with a tool like Flagger), run smoke tests, and automatically roll back if something's amiss. That would give us even more confidence in automated deploys.
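The promotion rule described above (pass smoke tests, then move to the next stage; otherwise halt) can be expressed as a small decision function. This is a sketch under assumptions: the type and function names are hypothetical, and the real gating lives in the CI workflow rather than in application code.

```typescript
type Environment = "develop" | "staging" | "production";

interface SmokeResult {
  check: string;
  passed: boolean;
}

type NextStep =
  | { action: "promote"; to: Environment }
  | { action: "halt"; failed: string[] }
  | { action: "done" };

const promotionOrder: Environment[] = ["develop", "staging", "production"];

// Decide what happens after the smoke suite has run against `current`.
function decideNextStep(current: Environment, results: SmokeResult[]): NextStep {
  // An empty result set counts as a failure so a misconfigured suite cannot silently pass.
  if (results.length === 0) return { action: "halt", failed: ["no smoke tests ran"] };

  const failed = results.filter((result) => !result.passed).map((result) => result.check);
  if (failed.length > 0) return { action: "halt", failed };

  const next = promotionOrder[promotionOrder.indexOf(current) + 1];
  return next ? { action: "promote", to: next } : { action: "done" };
}

console.log(
  decideNextStep("staging", [
    { check: "api health", passed: false },
    { check: "checkout page loads", passed: true },
  ]),
);
```

Returning the list of failed checks with the halt decision gives whoever investigates a starting point without digging through logs.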

Scheduled Load Tests (Outside Normal CI Flow)

As mentioned, our heavy load tests are not run on every code change (they'd slow the pipeline too much). However, we have partially integrated their execution into our tooling. Our long-term goal is to have a CircleCI job that can be triggered (manually or on a timer) to deploy the latest code to the special load-testing environment and run the k6 load test suite. This uses the same automation scripts as any other environment deploy, which makes it reproducible. The results of load tests are collected and can be uploaded as artifacts or sent to our dashboards for analysis. While currently this is a manual trigger (e.g., a developer kicks off the quarterly test run by tagging a release), we've scripted everything so that it's easy and consistent (via our bun run release command). In the future, we might integrate this to run on a schedule or as part of a release candidate process, once we're confident in the process and have the infrastructure to handle it routinely.

All of this is orchestrated through CircleCI by leveraging Nx (if you haven't read it, check out our article on Nx and its Task Graph here), giving us a centralized view of quality. We have configured our pipelines to provide fast feedback for developers (unit, integration, and E2E tests are parallelized and optimized to finish quickly) while still incorporating the deeper, slower tests in a manageable way. For example, we isolate the long-running tests to parallel CI workflow steps so they don't block feedback from faster-running targets, but they still must pass before code goes live or gets merged. The end result is a deployment pipeline where quality gates are fully automated: if the pipeline is green, we have a high degree of confidence in the build and its changes; if it's red, something is wrong, and we fix it before proceeding. This approach has significantly reduced the risk of bad deploys. In fact, deployments have become almost "boring", which is a good thing! We often deploy with the expectation that nothing will go wrong, and thanks to the pipeline, that expectation holds true.

Guiding Principles and Long-Term Vision

Implementing all these testing initiatives, we've kept a few guiding principles in mind that shape how we think about quality at Trellis:

"Test Early, Test Often"

We integrate testing at every phase of development. Writing unit tests for new functions and integration tests for new features is a normal part of our definition of done, not an optional step. Issues caught early (in a developer's local run or in CI pre-merge) are exponentially easier and cheaper to fix than those found later in production (and don't carry the risk of customer churn). This principle is reflected in our CI setup: every commit faces a gauntlet of tests, providing quick feedback to developers. By the time code is merged, it's been vetted thoroughly, which means faster, safer iteration.

Focus on Customer-Critical Flows

Not all tests are created equal. We prioritize automating the features and scenarios that matter most to our users and business (I wish we had the resources to automate literally everything, but alas). If forced to choose, we put our effort into testing high-impact areas (payments, event operations, key integrations) over low-risk corners of the app. This principle drove our whole regression testing initiative. It's about protecting what's most vital first. It also aligns with our company ethos of delighting users: a platform that "just works" for the important things will earn trust. So we make sure those important things are rock-solid.

Performance and Reliability are Features

We view non-functional requirements, like speed, scalability, and uptime, as first-class features of the product. A slow or flaky system is considered just as unacceptable as a functional bug. This mindset is why we invest in load testing, automation, and robust monitoring. It guides our trade-offs: if a certain test significantly slows down our pipeline, we'll find ways to optimize it rather than dropping it, because a fast feedback loop is essential for developer productivity. Likewise, if our tests aren't covering performance, we add scenarios that do. We don't pat ourselves on the back for a release that "works" functionally if it's degraded in speed or reliability. The job isn't done unless we meet our quality bar under real-world conditions.

Maintainability of Tests

Tests are code. We treat them with the same care and craftsmanship as production code. This means code review for tests, refactoring test code when it gets messy, and avoiding flaky or brittle test patterns. We strive to keep tests deterministic and simple by using realistic but controlled data, cleaning up state between runs, and avoiding inter-test dependencies. This discipline ensures that our test suite remains an asset, not a burden. It keeps developer confidence high – when a test fails, you can trust it's a real issue, not just "the test being weird."

Team Ownership and a Culture of Quality

Perhaps most importantly, we foster a culture where quality is everyone's responsibility. Writing and updating tests isn't just the QA developer's job (in fact, we don't have a separate QA team; our developers and product manager/designer own quality). Every engineer is expected to care about testing and automation. It's common to hear in code reviews, "Looks good, but did we add tests for this?", said in a positive, reinforcing way. We celebrate when our tests catch a bug before release; those are shared victories for the team and proof that our system and process work. Likewise, when a bug does slip through, we take it blamelessly as a learning opportunity: how did this escape, and how can we strengthen our process so it doesn't happen again? Over time, this has built tremendous trust within the team that our automated tests are a safety net, not an obstacle.

Looking ahead

Our long-term vision is ambitious. We want to reach a point where our platform is so well-tested and robust that we can deploy continuously with zero fear of breaking things (assuming we do acceptance testing of course! I would have to answer to our product manager/designer otherwise...), and where production incidents are exceedingly rare. At any point, our production systems should be deterministically stable such that we could step away for any period of time and never need to open a computer. Practically speaking, here's what we envision in the not-too-distant future:

- A (nearly) fully automated continuous deployment pipeline where code flows to production multiple times per day, gated by a comprehensive, near-flawless test suite. Releases become routine and uneventful because every change has been exercised thoroughly in CI. Pushing a button to deploy just isn't nerve-wracking anymore; it's as ordinary as merging a pull request.

- A rich dashboard of quality metrics that the team reviews regularly, which could include things like test coverage, pass/fail rates, performance metrics from load tests, and number of incidents. This keeps quality front-and-center. It also provides transparency: anyone in the company can see how we're doing on reliability and quality at a glance.

- The ability to scale effortlessly for major events and surges. Because we've done the homework with load testing and have real-time monitoring in place, we should rarely be caught off guard by high traffic. Our platform should handle big fundraising galas or viral campaigns without breaking a sweat. Where we can't fully automate scalability, our tests and alerts will guide us to intervene before any customer notices an issue. We're laying the groundwork for that by understanding our capacity limits through testing.

- A company culture that continually reinforces quality. This means onboarding new developers into our testing philosophy from day one. The idea is that high automation actually enables high speed; they are not at odds. When tests have your back, you can make changes more boldly. We've seen that mindset take hold: our team knows that investing a bit more time in writing a test today can save a ton of time debugging tomorrow. This will only grow as our processes mature.

In conclusion, our vision for testing and automation is our roadmap to world-class reliability and quality at Trellis. By clearly defining our test types, aligning testing initiatives with strategic company projects, and setting concrete goals and metrics, we've made quality a measurable, achievable objective rather than a vague ideal. With every critical flow covered by automated tests, every deployment vetted by smoke tests, and every performance issue explored by load tests, we will significantly reduce bugs, avoid performance bottlenecks, and iterate quickly without compromising trust. This approach is now our north star, guiding us quarter by quarter toward a platform that not only meets our users' needs, but truly exceeds their expectations for stability and performance.

If you have questions about our testing approach or want to share how your team tackles quality, feel free to reach out! We are always happy to share more about how we do things at Trellis! And if ensuring software quality at scale excites you, keep an eye on our journey – at Trellis (a fundraising platform that helps charities run more effective events), we're proving that fast-paced development can live in harmony with rock-solid reliability. We hope our insights help you in your own quest for better testing and automation.

Want to learn more about how we develop at Trellis? Check out these blog posts!